Universal Robots (UR5) real-time teleoperation

May – August 2023

Motivation: To reduce the time taken to collect demonstrations for Imitation Learning

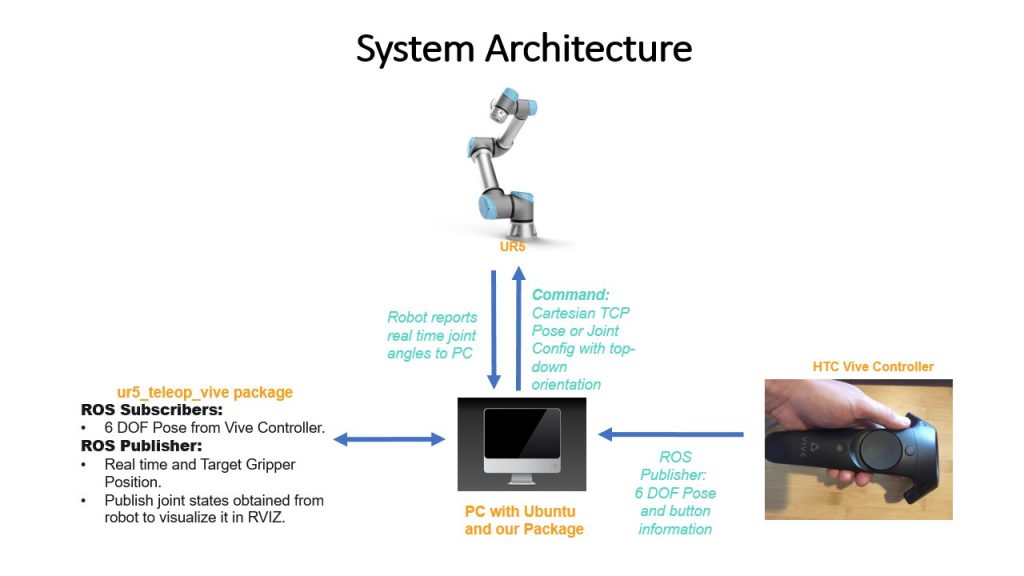

Objective: Control a Universal Robots UR5 in real time using a virtual reality controller

Tools and Resources:

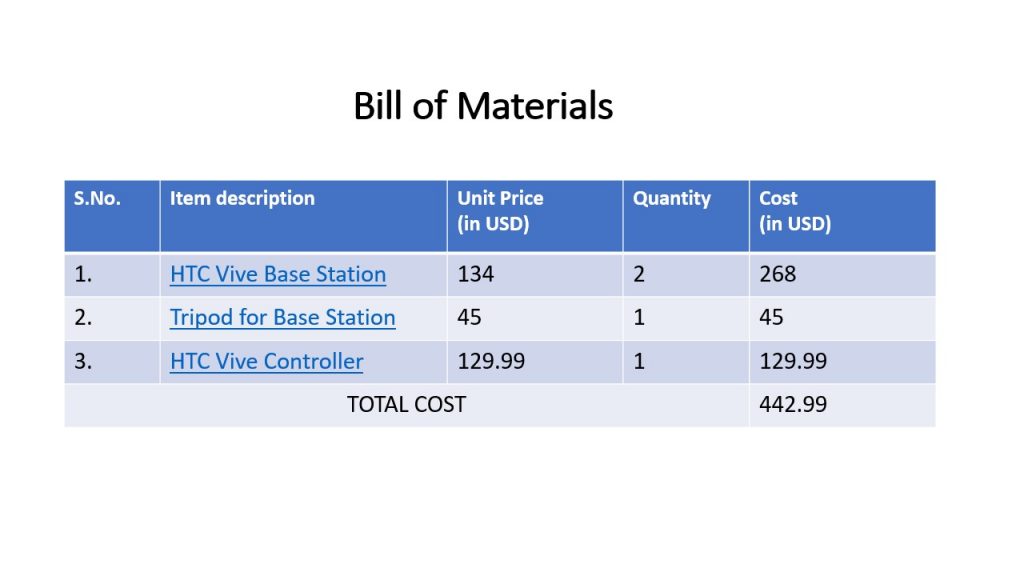

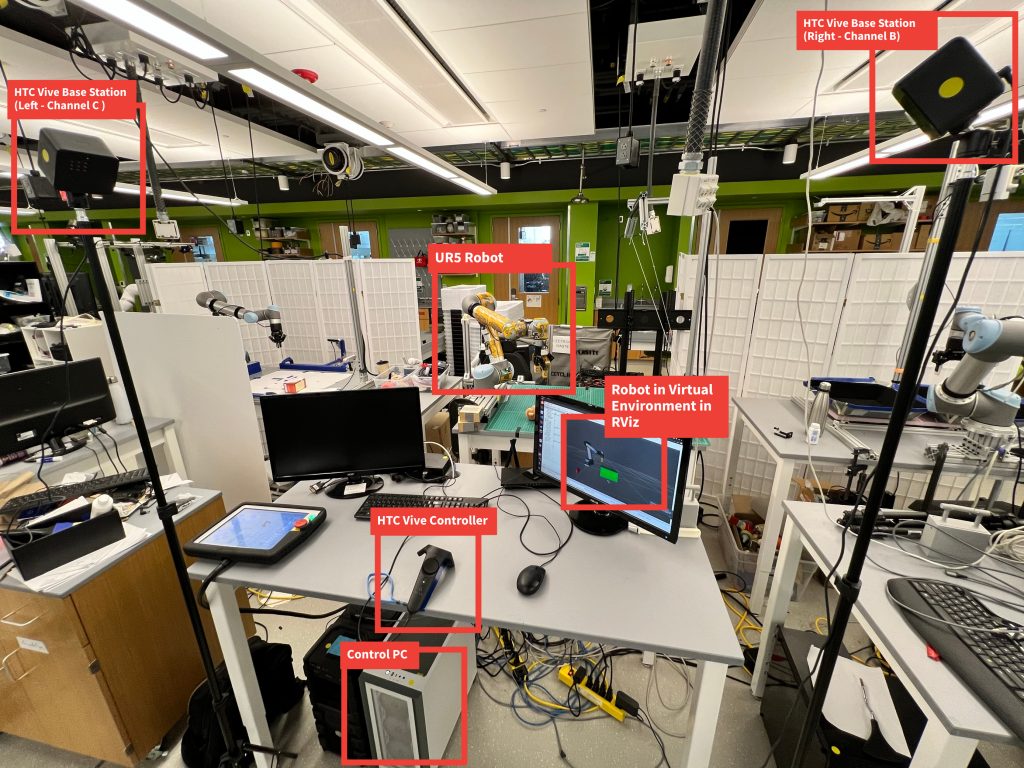

- UR5 Robot

- HTC Vive Base Stations x 2

- HTC Vive Controller x 1

- Ubuntu 20.04

- ROS1 Noetic

Details: In my last semester at Northeastern, I worked with Professor Robert Platt on an imitation learning problem for robotic manipulators. The robot, a Universal Robots UR5, had to be operated in real time by streaming it a Cartesian pose. Until then, people in the lab had done this with a PS4 controller, and recording a single pick-and-place action took around 40 minutes because the user had to select the relative pose again and again. That process was also prone to human error, and any mistake meant starting the recording over. My friend Siddharth and I solved this by reading the pose of an HTC Vive Controller, a device commonly used for AR/VR games, and passing it to a navigation stack we developed. The result worked very well: it gave the smoothest UR5 motion we had seen from any setup on our floor of the robotics research labs. With this method, recording a pick-and-place action took about 1 minute instead of 40, a 97.5% reduction in time.
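To illustrate the general idea of driving a robot from a handheld controller pose, here is a minimal sketch of relative ("clutched") pose mapping. It is not the code we ran on the UR5. The names `Pose`, `RelativeTeleop`, `engage`, `release`, and `target` are hypothetical, and the sketch assumes the controller tracking frame and the robot base frame have aligned axes, with quaternions stored in (x, y, z, w) order as in ROS.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]
Quat = Tuple[float, float, float, float]  # (x, y, z, w) ordering


def quat_multiply(a: Quat, b: Quat) -> Quat:
    """Hamilton product a * b for (x, y, z, w) quaternions."""
    ax, ay, az, aw = a
    bx, by, bz, bw = b
    return (
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
        aw * bw - ax * bx - ay * by - az * bz,
    )


def quat_conjugate(q: Quat) -> Quat:
    """Conjugate; equals the inverse for unit quaternions."""
    x, y, z, w = q
    return (-x, -y, -z, w)


@dataclass
class Pose:
    position: Vec3
    orientation: Quat


class RelativeTeleop:
    """Hypothetical helper: maps controller motion since 'engage' onto the robot."""

    def __init__(self, scale: float = 1.0) -> None:
        self.scale = scale  # controller-to-robot translation scaling (assumed)
        self._ctrl_ref: Optional[Pose] = None
        self._robot_ref: Optional[Pose] = None

    def engage(self, controller: Pose, robot: Pose) -> None:
        """Store both poses at the moment the user starts a motion."""
        self._ctrl_ref = controller
        self._robot_ref = robot

    def release(self) -> None:
        """Stop tracking so the user can reposition their hand freely."""
        self._ctrl_ref = None
        self._robot_ref = None

    def target(self, controller: Pose) -> Optional[Pose]:
        """Return the end-effector target, or None when not engaged."""
        if self._ctrl_ref is None or self._robot_ref is None:
            return None
        # Translation: robot reference plus scaled controller displacement.
        position = tuple(
            r + self.scale * (c - c0)
            for r, c, c0 in zip(
                self._robot_ref.position,
                controller.position,
                self._ctrl_ref.position,
            )
        )
        # Rotation: apply the controller's rotation since 'engage'
        # on top of the robot's reference orientation.
        delta = quat_multiply(
            controller.orientation, quat_conjugate(self._ctrl_ref.orientation)
        )
        orientation = quat_multiply(delta, self._robot_ref.orientation)
        return Pose(position, orientation)
```

In a setup like this, `engage` would typically be tied to a trigger press, and each new controller pose would be passed to `target` to get the Cartesian pose to send to the robot. Working with displacements rather than absolute poses means the operator does not have to stand at any particular spot relative to the base stations.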

What didn’t work?

- MoveIt – the `compute_cartesian_path` and `go` methods did not work in real time

- MoveIt Servo – kept running into joint-limit and singularity issues

- Orocos KDL IK Solver – the resulting motion was neither smooth nor natural